Poetry Generation Using Markov Chains

Far simpler than current-generation Large Language Models (LLMs) like LLaMA, GPT, or BERT, Markov chains offer a simple yet effective method for generating poetry. By analyzing a corpus of text (here, a poem), we can create a probabilistic model that predicts the next word based on the current word. This post explores a Poetry Generator that uses Markov chains to showcase a blend of technology and creativity.

Crafting verses with code

The Poetry Generator (poetry.humbertowoody.xyz) is a web application designed to showcase the process of analyzing text, creating a probabilistic model from it, and using that model to generate new text. The application uses Markov chains to generate poems that mimic the style of the original corpus. This project highlights the intersection of technology and creativity, providing a platform where users can visualize the data-driven process behind poetry generation.

Visit the Poetry Generator (poetry.humbertowoody.xyz) to provide a poem, see the transition probabilities between words, and generate new poems based on the input text.

So, what are Markov chains?

Markov chains are mathematical models in which the next state depends only on the current state, not on the states that came before it. In the context of text generation, each word represents a state, and the transition to the next word depends on the current word. Here’s how it works:

- Corpus Analysis: The application analyzes the provided poem to extract the words and their relationships. For example, given the phrase “the quick brown fox,” the words “the” and “quick” are connected, as are “quick” and “brown,” and so on.

- Transition Matrix: A transition matrix is created, where each cell represents the probability of transitioning from one word to another. This way, the model learns the likelihood of each word following another.

- Text Generation: Starting with an initial random word, the Markov chain generates the next word based on the transition probabilities. This process continues until the poem reaches a specified length.

By repeating this process, the Poetry Generator creates new poems that capture the stylistic patterns of the original text.
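The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not the Poetry Generator's actual source: the function names `build_transitions` and `generate`, the lowercase whitespace tokenization, and the choice to stop early when a word has no successor are all assumptions made for the example.

```python
import random
from collections import defaultdict


def build_transitions(text):
    """Corpus analysis: count how often each word is followed by each other word."""
    words = text.lower().split()  # assumed tokenization: lowercase, split on whitespace
    counts = defaultdict(lambda: defaultdict(int))
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return {word: dict(nexts) for word, nexts in counts.items()}


def generate(transitions, length, seed=None):
    """Text generation: walk the chain from a random start word for up to `length` words."""
    rng = random.Random(seed)
    word = rng.choice(sorted(transitions))
    output = [word]
    while len(output) < length:
        nexts = transitions.get(word)
        if not nexts:  # dead end: this word never had a successor in the corpus
            break
        candidates = list(nexts)
        weights = [nexts[c] for c in candidates]
        # Sampling with counts as weights is equivalent to sampling
        # from the normalized transition probabilities.
        word = rng.choices(candidates, weights=weights, k=1)[0]
        output.append(word)
    return " ".join(output)


poem = "The sun sets in the west And birds return to their nest"
model = build_transitions(poem)
print(generate(model, length=8, seed=42))
```

With a corpus this small, most words have a single successor, so the output mostly replays the original lines. Variety only appears at words like “the” that are followed by more than one word.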

A visual journey through the data

The project provides the user with three visualizations for the transition probabilities between words: a heatmap, a network graph, and a relationship matrix. These visualizations offer different perspectives on the data and highlight the connections between words in the corpus.

Relationship Matrix

This section displays the raw data object that represents the transitions between words. Each word maps to its possible next words and a weight for each transition. This matrix provides a detailed view of the data structure used to generate the poems, allowing users to inspect the underlying model.

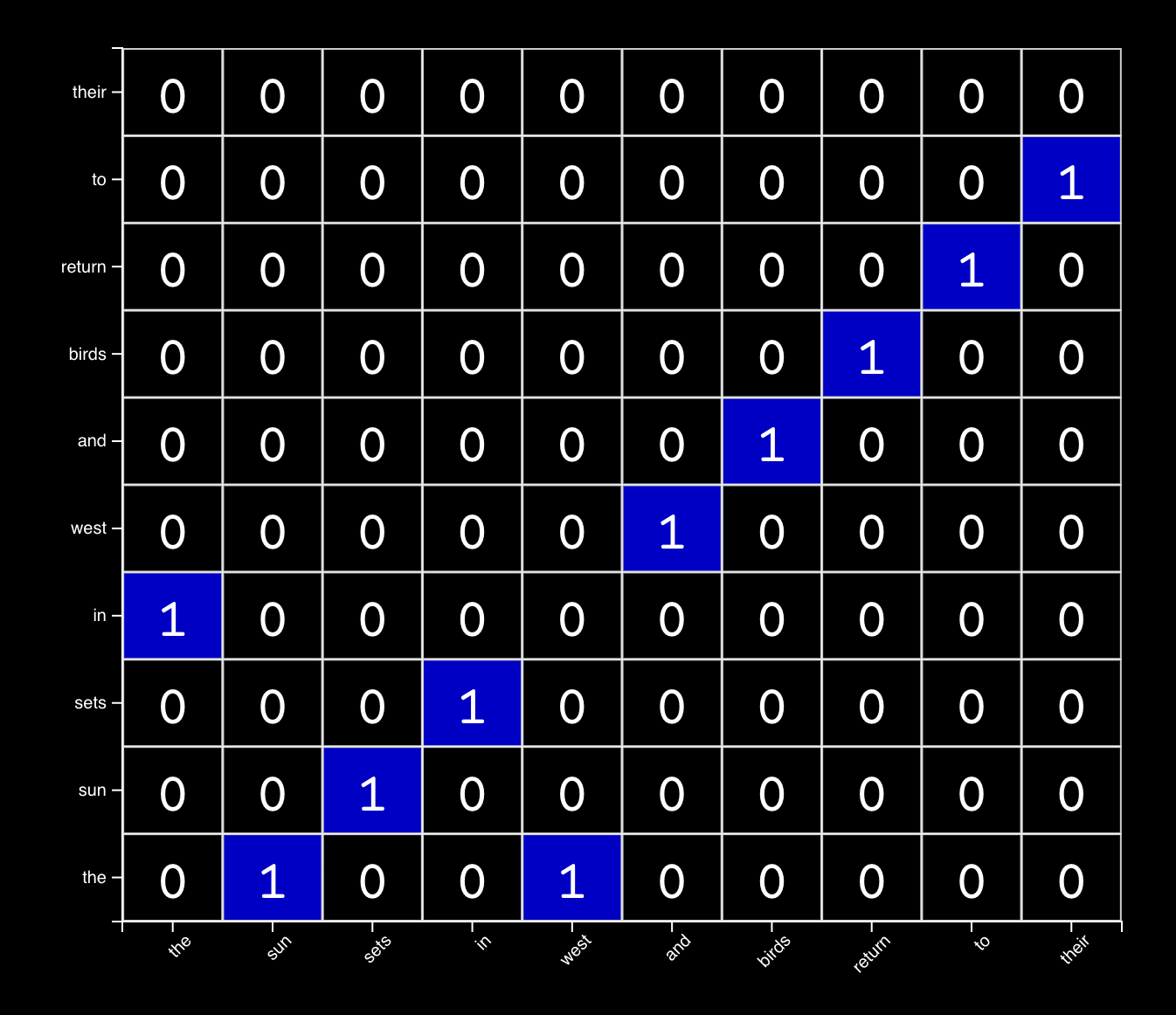

For example, for the following input poem:

    The sun sets in the west
    And birds return to their nest

The relationship matrix would look like this:

    {
      "the": {
        "sun": 1,
        "west": 1
      },
      "sun": {
        "sets": 1
      },
      "sets": {
        "in": 1
      },
      "in": {
        "the": 1
      },
      "west": {
        "and": 1
      },
      "and": {
        "birds": 1
      },
      "birds": {
        "return": 1
      },
      "return": {
        "to": 1
      },
      "to": {
        "their": 1
      },
      "their": {
        "nest": 1
      }
    }

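This matrix maps each word in the corpus to the words that can follow it. Note that the values shown here read as occurrence counts rather than normalized probabilities: “the” is followed once by “sun” and once by “west,” so each of those transitions has a probability of 0.5.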
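In the example object every value is 1, and “the” has two successors, so the values are most naturally read as counts. Under that assumption, turning them into probabilities is a matter of dividing each count by its row total. The helper name `normalize` is hypothetical and only meant as a sketch:

```python
def normalize(counts):
    """Convert per-word successor counts into probabilities that sum to 1 per word."""
    probabilities = {}
    for word, nexts in counts.items():
        total = sum(nexts.values())
        probabilities[word] = {nxt: n / total for nxt, n in nexts.items()}
    return probabilities


counts = {"the": {"sun": 1, "west": 1}, "sun": {"sets": 1}}
print(normalize(counts))
# {'the': {'sun': 0.5, 'west': 0.5}, 'sun': {'sets': 1.0}}
```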

Heatmap

The heatmap visualizes the transition probabilities between words, with each cell representing the likelihood of transitioning from one word to another. The color intensity indicates the strength of the relationship, allowing users to identify patterns and connections within the text.

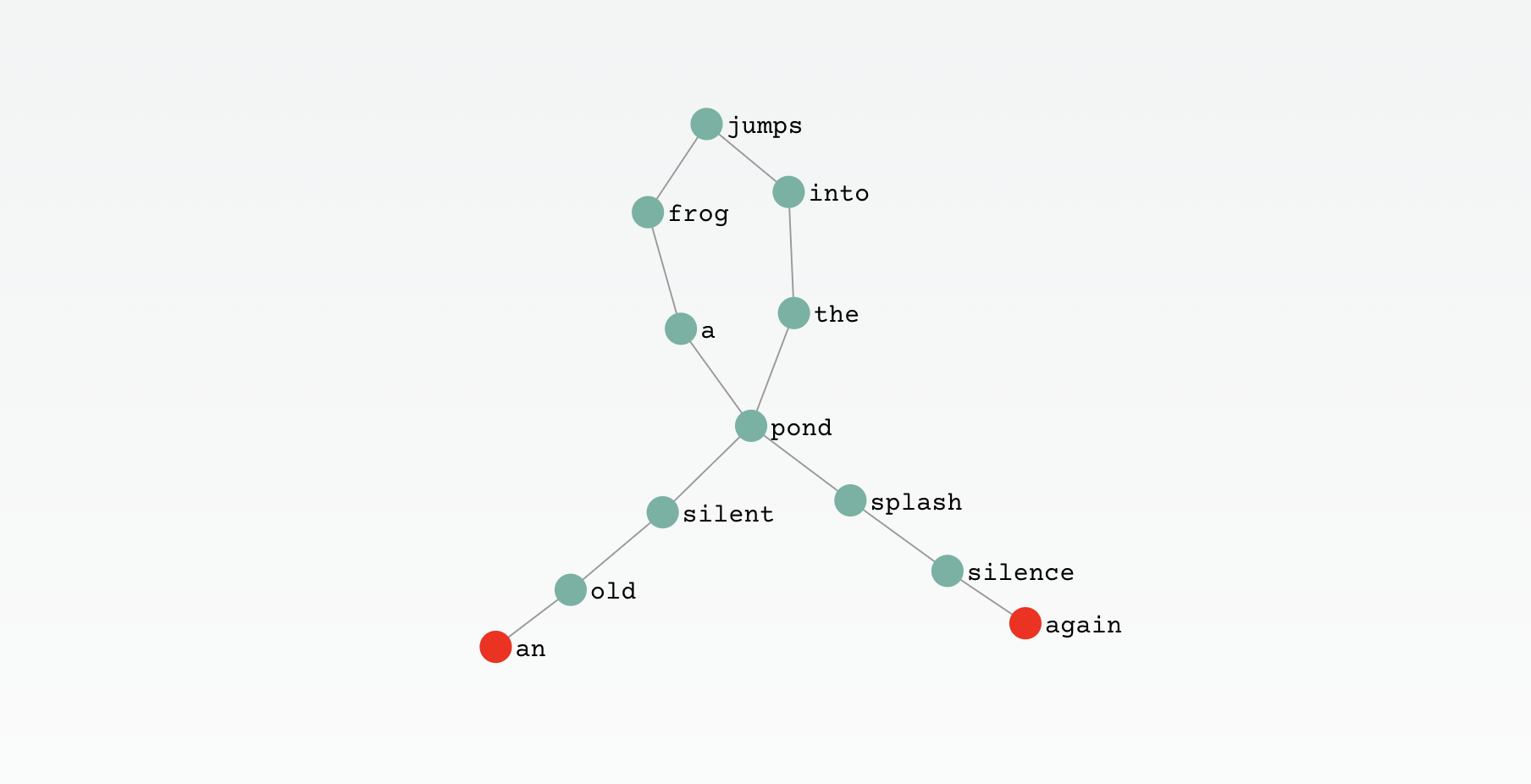
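A heatmap needs a dense grid rather than a nested object. The sketch below shows one way to derive that grid, assuming the nested values are counts and that rows and columns are ordered alphabetically. The function name `to_dense_matrix` is hypothetical, and the application may build its heatmap differently.

```python
def to_dense_matrix(transitions):
    """Return (vocab, matrix) where matrix[i][j] is P(vocab[j] follows vocab[i])."""
    # Include words that only ever appear as successors, such as the last word.
    vocab = sorted(set(transitions) | {n for nexts in transitions.values() for n in nexts})
    index = {word: i for i, word in enumerate(vocab)}
    matrix = [[0.0] * len(vocab) for _ in vocab]
    for word, nexts in transitions.items():
        total = sum(nexts.values())
        for nxt, count in nexts.items():
            matrix[index[word]][index[nxt]] = count / total
    return vocab, matrix


vocab, matrix = to_dense_matrix({"the": {"sun": 1, "west": 1}, "sun": {"sets": 1}})
for word, row in zip(vocab, matrix):
    print(f"{word:>5}", row)
```

Most cells are zero for real text, which is why the color intensity in a heatmap makes the few strong transitions easy to spot.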

Network Graph

The network graph illustrates the words as nodes and the transition probabilities as edges between them. This visualization provides a clear representation of the connections between words, making it easier to identify clusters and relationships within the text. It’s also interactive, allowing users to explore the network, drag nodes, and zoom in for a closer look.
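The same transition data can be reshaped into the node and edge lists that graph-drawing libraries typically expect. This is a sketch under assumptions: the `to_graph` name and the `id`, `source`, `target`, and `weight` keys are illustrative and not taken from the application.

```python
def to_graph(transitions):
    """Turn nested successor counts into node and weighted directed-edge lists."""
    words = sorted(set(transitions) | {n for nexts in transitions.values() for n in nexts})
    edges = []
    for source, nexts in transitions.items():
        total = sum(nexts.values())
        for target, count in nexts.items():
            # Edge weight is the transition probability from source to target.
            edges.append({"source": source, "target": target, "weight": count / total})
    return {"nodes": [{"id": word} for word in words], "edges": edges}


graph = to_graph({"the": {"sun": 1, "west": 1}, "sun": {"sets": 1}})
print(len(graph["nodes"]), "nodes,", len(graph["edges"]), "edges")
```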

Comparison to Current Generation LLMs

While Markov chains offer a straightforward approach to text generation, current-generation Large Language Models (LLMs) like GPT-3.5 provide a more sophisticated and nuanced method. Here’s a comparison:

Simplicity vs. Complexity

- Markov Chains: Relatively simple and easy to implement, Markov chains rely on direct probabilities between words. They are effective for capturing local patterns but struggle with long-range dependencies.

- LLMs: LLMs, such as GPT-3.5, use deep learning techniques to understand context and generate text. They can capture complex patterns and maintain coherence over longer passages, making them more versatile.

Training Data and Flexibility

- Markov Chains: Require a smaller corpus and are less resource-intensive to train. They are ideal for specific tasks like poetry generation where simplicity and artistic value are prioritized.

- LLMs: Trained on vast amounts of diverse data, LLMs are flexible and can handle a wide range of text generation tasks, from poetry to technical writing.

Output Quality

- Markov Chains: Produce text that mimics the style of the original corpus but may lack depth and coherence over longer texts.

- LLMs: Generate high-quality, coherent text that can adapt to various contexts, making them suitable for applications requiring sophisticated language understanding.

Conclusion: Exploring the Poetic Potential of Technology

Markov chains offer a fascinating glimpse into the intersection of technology and creativity. Through the Poetry Generator, users can experience firsthand how a simple probabilistic model can craft delightful verses. This project not only showcases the capabilities of Markov chains but also invites us to ponder the broader implications of machine-generated art. Whether you’re a tech enthusiast or a poetry lover, I hope this journey through data-driven poetry inspires you to explore the poetic potential of statistical models.

Visit the Poetry Generator to try it out yourself and create your own unique poems. As always, thanks for reading!